Monitoring Kubernetes with AppOptics

AppOptics monitors Kubernetes both as a cluster and at the level of the containers and processes running inside it. Applications running in Kubernetes are monitored with the AppOptics APM agents. To learn more about the Kubernetes plugin, see its documentation.

For more in-depth monitoring of the containers running in Kubernetes, use the CRI + containerd or Docker integration, depending on which container runtime your Kubernetes cluster uses. See the CRI + containerd plugin and Docker plugin instructions.

Setup

There are three basic ways to monitor a Kubernetes cluster with AppOptics: run the SolarWinds Snap Agent directly on a host with access to the Kubernetes cluster, in a pod, or as a sidecar.

Setup on the host

To monitor a Kubernetes cluster with the SolarWinds Snap Agent running on a host that has access to the cluster:

- Grant the `solarwinds` user read permission on the `kubeconfig` file for the Kubernetes cluster and execute permission on the directory that contains it. The `kubeconfig` file location may vary depending on your settings; a common location is `$HOME/.kube/config`.

- Set up the plugin config. Example plugin config:

  ```yaml
  collector:
    kubernetes:
      all:
        incluster: false
        kubeconfigpath: "//path_to_k8s_config/.kube/config"
        interval: 60s
  load:
    plugin: snap-plugin-collector-aokubernetes
    task: task-aokubernetes.yaml
  ```

  The plugin config must include the `incluster: false` setting, which indicates that the SolarWinds Snap Agent is not running in a pod in the Kubernetes cluster.

- Confirm that the Kubernetes plugin is running with the swisnap CLI. For more information, see swisnap CLI.

  ```shell
  swisnap task list
  ```

- Enable the Kubernetes plugin on the AppOptics Integrations page. Data from Kubernetes appears in AppOptics shortly after you enable it.
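You can also script the plugin config step. The following is a minimal sketch, not part of the agent package. It writes the example plugin config above for a given `kubeconfig` path. The helper name `write_k8s_plugin_config` is hypothetical. The default destination `/opt/SolarWinds/Snap/etc/plugins.d/kubernetes.yaml` is an assumption, based on the path the container image uses in the deployment examples on this page; confirm where your host installation reads plugin configs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the host-mode Kubernetes plugin config.
# ASSUMPTION: the default destination below is where your host agent reads
# plugin configs; adjust it if your installation differs.

write_k8s_plugin_config() {
  local kubeconfig="$1"
  local dest="${2:-/opt/SolarWinds/Snap/etc/plugins.d/kubernetes.yaml}"

  # Fail early if the kubeconfig path is empty or not a regular file.
  if [ -z "$kubeconfig" ] || [ ! -f "$kubeconfig" ]; then
    echo "kubeconfig not found: $kubeconfig" >&2
    return 1
  fi

  # incluster: false tells the plugin that the agent is NOT running in a pod.
  cat > "$dest" <<EOF
collector:
  kubernetes:
    all:
      incluster: false
      kubeconfigpath: "$kubeconfig"
      interval: 60s
load:
  plugin: snap-plugin-collector-aokubernetes
  task: task-aokubernetes.yaml
EOF
}
```

For example, run `write_k8s_plugin_config /home/<user>/.kube/config` as a user that can write to the plugins directory. Then use `swisnap task list` to check that the Kubernetes task is loaded; you may need to restart the agent service first.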

Setup in a pod

To monitor a Kubernetes cluster with the SolarWinds Snap Agent running in a Kubernetes pod:

- Clone the solarwinds-snap-agent-docker repository:

  ```shell
  git clone https://github.com/solarwinds/solarwinds-snap-agent-docker
  ```

- The Kubernetes assets in the solarwinds-snap-agent-docker repository expect a `solarwinds-token` secret to exist. To create this secret, run:

  ```shell
  kubectl create secret generic solarwinds-token -n kube-system --from-literal=SOLARWINDS_TOKEN=<REPLACE WITH TOKEN>
  ```

- (Optional) If your token for Loggly or Papertrail is different from your SolarWinds token, create a separate Kubernetes secret so the SolarWinds Snap Agent logs collector/forwarder can publish to Loggly or Papertrail. If your token for Loggly or Papertrail is the same as your SolarWinds token, no action is needed.

  ```shell
  # setting for loggly-http, loggly-http-bulk, loggly-syslog Logs Publishers
  kubectl create secret generic loggly-token -n kube-system --from-literal=LOGGLY_TOKEN=<REPLACE WITH LOGGLY TOKEN>

  # setting for swi-logs-http-bulk, swi-logs-http Logs Publishers
  kubectl create secret generic papertrail-token -n kube-system --from-literal=PAPERTRAIL_TOKEN=<REPLACE WITH PAPERTRAIL TOKEN>

  # setting for papertrail-syslog publisher
  kubectl create secret generic papertrail-publisher-settings -n kube-system --from-literal=PAPERTRAIL_HOST=<REPLACE WITH PAPERTRAIL HOST> --from-literal=PAPERTRAIL_PORT=<REPLACE WITH PAPERTRAIL PORT>
  ```
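Each `kubectl create secret` command above fails with an "already exists" error if you run it a second time, for example when rotating a token. A common kubectl pattern avoids this: render the secret locally with `--dry-run=client -o yaml`, then pipe it into `kubectl apply`. The sketch below wraps that pattern in a hypothetical `create_or_update_secret` function. `--dry-run=client` requires kubectl 1.18 or later.

```shell
#!/usr/bin/env bash
# Sketch: create or update a generic secret in kube-system.
# Plain "kubectl create secret" errors when the secret already exists;
# rendering it locally and applying it makes re-runs safe.

create_or_update_secret() {
  local name="$1" key="$2" value="$3"
  local manifest

  # Refuse to create a secret with an empty value (e.g. an unset variable).
  if [ -z "$name" ] || [ -z "$key" ] || [ -z "$value" ]; then
    echo "usage: create_or_update_secret NAME KEY VALUE" >&2
    return 1
  fi

  # Render the Secret manifest without contacting the cluster...
  manifest=$(kubectl create secret generic "$name" -n kube-system \
    --from-literal="$key=$value" --dry-run=client -o yaml) || return 1
  # ...then apply it, which creates or updates the secret.
  printf '%s\n' "$manifest" | kubectl apply -f -
}
```

For example, `create_or_update_secret solarwinds-token SOLARWINDS_TOKEN "$SOLARWINDS_TOKEN"` reads the token from an environment variable. That also keeps the literal token out of your shell history.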

Deployment

- By default, RBAC is enabled in the deploy manifests. If you are not using RBAC, remove the reference to the Service Account from swisnap-agent-deployment.yaml before you deploy it.

- Configure your plugins. In the `configMapGenerator` section of kustomization.yaml, choose which plugins run by setting `SWISNAP_ENABLE_<plugin_name>` to either `true` or `false`. Plugins turned on through environment variables use the default configuration and task files. For the list of plugins that can currently be enabled this way, see Environment parameters.

- After configuring the deployment and making sure the `solarwinds-token` secret exists, run:

  ```shell
  kubectl create -f swisnap-agent-deployment.yaml
  ```

- Check that the deployment is running properly:

  ```shell
  kubectl get deployment swisnap-agent-k8s -n kube-system
  ```

- Enable the Kubernetes plugin on the AppOptics Integrations page. Data from Kubernetes appears in AppOptics shortly after you enable it.
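You can script the `configMapGenerator` edit from the plugin configuration step. The sketch below is a hypothetical helper, not part of the solarwinds-snap-agent-docker repository, that flips an existing `SWISNAP_ENABLE_<plugin_name>` literal with `sed`. It assumes each flag appears as `SWISNAP_ENABLE_<NAME>=true` or `=false` on its own line, as in the kustomization.yaml diff later on this page.

```shell
#!/usr/bin/env bash
# Hypothetical helper: flip SWISNAP_ENABLE_<PLUGIN> in a kustomization.yaml
# configMapGenerator literal, e.g. "- SWISNAP_ENABLE_APACHE=false".

set_plugin_flag() {
  local file="$1" plugin="$2" value="$3"

  case "$value" in
    true|false) ;;
    *) echo "value must be true or false" >&2; return 1 ;;
  esac
  # Plugin names in these variables are upper case, e.g. APACHE, DOCKER.
  case "$plugin" in
    ''|*[!A-Z0-9_]*) echo "invalid plugin name: $plugin" >&2; return 1 ;;
  esac
  # Only edit an existing literal; never silently append a new one.
  grep -q "SWISNAP_ENABLE_${plugin}=" "$file" || {
    echo "SWISNAP_ENABLE_${plugin} not found in $file" >&2; return 1; }

  # The "=" right after the name keeps KUBERNETES from matching KUBERNETES_LOGS.
  # -i.bak works with both GNU and BSD sed; the backup is removed afterwards.
  sed -E -i.bak "s/(SWISNAP_ENABLE_${plugin})=(true|false)/\1=${value}/" "$file" &&
    rm -f "$file.bak"
}
```

For example, run `set_plugin_flag deploy/base/deployment/kustomization.yaml APACHE true` before deploying. Afterwards, `kubectl rollout status deployment/swisnap-agent-k8s -n kube-system` waits until the Deployment's pods are available, which is a stricter check than `kubectl get deployment`.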

DaemonSet

The DaemonSet gives you insight into the containers running on each node and gathers system, process, and Docker metrics. To deploy the DaemonSet to Kubernetes:

- Verify that the `solarwinds-token` secret already exists, then run:

  ```shell
  kubectl apply -k ./deploy/overlays/stable/daemonset
  ```

- Enable the Docker plugin on the AppOptics Integrations page. Data appears in AppOptics shortly after you enable it.
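Before you enable the Docker plugin, you can confirm that an agent pod is ready on every node the DaemonSet targets. The sketch below compares the DaemonSet's `desiredNumberScheduled` and `numberReady` status fields. The function name is hypothetical. The DaemonSet name is passed in; take it from the manifests under `deploy/overlays/stable/daemonset`, since this page does not state it.

```shell
#!/usr/bin/env bash
# Sketch: report whether a DaemonSet has a ready pod on every node it targets.
# The DaemonSet name is an argument; look it up in the deployed manifests.

daemonset_ready() {
  local name="$1" ns="${2:-kube-system}"
  local desired ready

  desired=$(kubectl get daemonset "$name" -n "$ns" \
    -o jsonpath='{.status.desiredNumberScheduled}') || return 1
  ready=$(kubectl get daemonset "$name" -n "$ns" \
    -o jsonpath='{.status.numberReady}') || return 1

  echo "$name: $ready/$desired pods ready"
  # Succeed only when every scheduled node reports a ready agent pod.
  [ -n "$desired" ] && [ "$desired" -gt 0 ] && [ "$ready" = "$desired" ]
}
```

`kubectl rollout status daemonset/<name> -n kube-system` gives a similar answer interactively. The function form returns a plain exit status, which is easier to use in scripts.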

Setup as a sidecar

The SolarWinds Snap Agent can run in a Kubernetes cluster as a sidecar container in another application's pod. In this scenario, it monitors only the services running in that particular pod, not the Kubernetes cluster itself. The configuration is similar to the pod setup. However, to monitor only specific services, disable the kubernetes and aosystem plugins by setting `SWISNAP_ENABLE_KUBERNETES` to `false` and `SWISNAP_DISABLE_HOSTAGENT` to `true` in swisnap-agent-deployment.yaml.

Containers inside the same pod can communicate over localhost, so there is no need to pass a static IP. For more information, see the Kubernetes documentation on Resource sharing and communication.

To monitor services with the SolarWinds Snap Agent as a sidecar, add a second container to your deployment YAML under `spec.template.spec.containers`. This gives the Snap Agent access to your service over localhost (note `SWISNAP_ENABLE_APACHE`).

These instructions use an Apache server; to monitor a different server, modify the YAML to fit your setup.

```yaml
containers:
  - name: apache
    imagePullPolicy: Always
    image: '<your-image>'
    ports:
      - containerPort: 80
  - name: swisnap-agent-ds
    image: 'solarwinds/solarwinds-snap-agent-docker:latest'
    imagePullPolicy: Always
    env:
      # Replace the value with your SolarWinds token
      - name: SOLARWINDS_TOKEN
        value: 'SOLARWINDS_TOKEN'
      - name: APPOPTICS_HOSTNAME
        valueFrom:
          fieldRef:
            fieldPath: spec.nodeName
      - name: SWISNAP_ENABLE_DOCKER
        value: 'false'
      # Only the Apache plugin runs in this sidecar
      - name: SWISNAP_ENABLE_APACHE
        value: 'true'
      - name: SWISNAP_DISABLE_HOSTAGENT
        value: 'true'
      - name: HOST_PROC
        value: '/host/proc'
```

In the example above, the sidecar runs only the Apache plugin. If the default Apache plugin configuration is not sufficient, pass a custom one to the pod running the SolarWinds Snap Agent. See Integrating custom plugins configuration with the Snap Agent deployment for more information.
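The sidecar is just another entry under `spec.template.spec.containers`. A quick way to confirm that a rolled-out pod picked it up is to list the pod's container names. The sketch below does that with a jsonpath query. The function name and the `default` namespace fallback are assumptions for illustration. The sidecar name defaults to `swisnap-agent-ds`, as in the example YAML.

```shell
#!/usr/bin/env bash
# Sketch: confirm a pod actually contains the Snap Agent sidecar container.
# Defaults (hypothetical): namespace "default", sidecar name "swisnap-agent-ds".

has_sidecar() {
  local pod="$1" ns="${2:-default}" sidecar="${3:-swisnap-agent-ds}"
  local names

  # jsonpath prints the pod's container names separated by spaces.
  names=$(kubectl get pod "$pod" -n "$ns" \
    -o jsonpath='{.spec.containers[*].name}') || return 1

  # Pad with spaces so "swisnap-agent-ds-old" does not count as a match.
  case " $names " in
    *" $sidecar "*) return 0 ;;
    *) echo "$pod has no $sidecar container (found: $names)" >&2; return 1 ;;
  esac
}
```

For example, `has_sidecar <pod-name> <namespace> && kubectl logs <pod-name> -c swisnap-agent-ds -n <namespace>` shows the agent's output only when the sidecar is present.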

Integrating custom plugins configuration with the Snap Agent deployment

These instructions are for the Snap Agent running inside a pod. If the Snap Agent is set up on a host, see Integrations for information about enabling the available plugins.

When the SolarWinds Snap Agent runs inside a Kubernetes pod, integrating AppOptics plugins with Kubernetes requires some additional steps. The SolarWinds Snap Agent image uses the default plugin configuration files and task manifests. To use your own configuration, create a Kubernetes configMap.

How to create the configMaps varies depending on the version of the plugin used. See Plugin Version 1 or Plugin Version 2 for detailed instructions.

Plugin Version 1

Set up two configMaps, one for the SolarWinds Snap Agent Kubernetes plugin config and another for a corresponding task:

- Create a configMap using a task manifest and plugin config.

  ```shell
  # create plugin configMap
  kubectl create configmap kubernetes-plugin-config --from-file=/path/to/my/plugins.d/kubernetes.yaml --namespace=kube-system

  # create task configMap
  kubectl create configmap kubernetes-task-manifest --from-file=/path/to/my/tasks.d/task-aokubernetes.yaml --namespace=kube-system

  # check if everything is fine
  kubectl describe configmaps --namespace=kube-system kubernetes-task-manifest kubernetes-plugin-config
  ```

- Attach the configMaps to the SolarWinds Snap Agent deployment. In this example, notice `spec.template.spec.containers.volumeMounts` and `spec.template.spec.volumes`:

  ```diff
  diff --git a/deploy/base/deployment/kustomization.yaml b/deploy/base/deployment/kustomization.yaml
  index 79e0110..000a108 100644
  --- a/deploy/base/deployment/kustomization.yaml
  +++ b/deploy/base/deployment/kustomization.yaml
  @@ -15,7 +15,7 @@ configMapGenerator:
         - SWISNAP_ENABLE_APACHE=false
         - SWISNAP_ENABLE_DOCKER=false
         - SWISNAP_ENABLE_ELASTICSEARCH=false
  -      - SWISNAP_ENABLE_KUBERNETES=true
  +      - SWISNAP_ENABLE_KUBERNETES=false
         - SWISNAP_ENABLE_PROMETHEUS=false
         - SWISNAP_ENABLE_MESOS=false
         - SWISNAP_ENABLE_MONGODB=false
  diff --git a/deploy/base/deployment/swisnap-agent-deployment.yaml b/deploy/base/deployment/swisnap-agent-deployment.yaml
  index 294c4b4..babff7d 100644
  --- a/deploy/base/deployment/swisnap-agent-deployment.yaml
  +++ b/deploy/base/deployment/swisnap-agent-deployment.yaml
  @@ -45,6 +45,12 @@ spec:
               - configMapRef:
                   name: swisnap-k8s-configmap
             volumeMounts:
  +            - name: kubernetes-plugin-vol
  +              mountPath: /opt/SolarWinds/Snap/etc/plugins.d/kubernetes.yaml
  +              subPath: kubernetes.yaml
  +            - name: kubernetes-task-vol
  +              mountPath: /opt/SolarWinds/Snap/etc/tasks.d/task-aokubernetes.yaml
  +              subPath: task-aokubernetes.yaml
               - name: proc
                 mountPath: /host/proc
                 readOnly: true
  @@ -56,6 +62,18 @@ spec:
                 cpu: 100m
                 memory: 256Mi
         volumes:
  +        - name: kubernetes-plugin-vol
  +          configMap:
  +            name: kubernetes-plugin-config
  +            items:
  +              - key: kubernetes.yaml
  +                path: kubernetes.yaml
  +        - name: kubernetes-task-vol
  +          configMap:
  +            name: kubernetes-task-manifest
  +            items:
  +              - key: task-aokubernetes.yaml
  +                path: task-aokubernetes.yaml
           - name: proc
             hostPath:
               path: /proc
  ```

[Environment Parameters](#environment-parameters) are not needed to turn on the Kubernetes plugins. When you attach task files and plugin configuration files through configMaps, you don't need to set the `SWISNAP_ENABLE_<plugin-name>` environment variables. The SolarWinds Snap Agent automatically loads plugins based on the files stored in the configMaps.
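Files mounted from a configMap with `subPath`, as in the diff above, are not updated in running containers when the configMap changes. After editing `kubernetes.yaml` or `task-aokubernetes.yaml`, you therefore need to update the configMaps and recreate the agent pods. The sketch below does both. It assumes the Deployment name `swisnap-agent-k8s` from the pod setup section and kubectl 1.18 or later. The function name is hypothetical. Keep the local file names `kubernetes.yaml` and `task-aokubernetes.yaml`: `--from-file` uses the file name as the configMap key, and the volume `items` refer to those keys.

```shell
#!/usr/bin/env bash
# Sketch: re-create the Plugin Version 1 configMaps from local files and
# restart the agent. Pods do not see configMap updates for files mounted
# with subPath, so a restart is needed after each change.

refresh_k8s_plugin_configmaps() {
  local plugin_cfg="$1" task_cfg="$2" manifest f
  local ns=kube-system deploy=swisnap-agent-k8s  # assumed names

  for f in "$plugin_cfg" "$task_cfg"; do
    [ -f "$f" ] || { echo "missing file: $f" >&2; return 1; }
  done

  # Render each configMap locally and apply it, so existing ones are updated.
  manifest=$(kubectl create configmap kubernetes-plugin-config \
    --from-file="$plugin_cfg" -n "$ns" --dry-run=client -o yaml) || return 1
  printf '%s\n' "$manifest" | kubectl apply -f - || return 1

  manifest=$(kubectl create configmap kubernetes-task-manifest \
    --from-file="$task_cfg" -n "$ns" --dry-run=client -o yaml) || return 1
  printf '%s\n' "$manifest" | kubectl apply -f - || return 1

  # Recreate the agent pods so the subPath mounts pick up the new content.
  kubectl rollout restart deployment/"$deploy" -n "$ns"
}
```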

Plugin Version 2

- Set up one configMap for the SolarWinds Snap Agent Kubernetes logs collector/forwarder task configuration:

  ```shell
  # create task configuration configMap for Plugin v2
  kubectl create configmap logs-task-config --from-file=/path/to/my/task-autoload.d/task-logs-k8s-events.yaml --namespace=kube-system
  ```

- Attach the configMap to the SolarWinds Snap Agent deployment. In this example, notice `spec.template.spec.containers.volumeMounts` and `spec.template.spec.volumes`:

  ```diff
  diff --git a/deploy/base/deployment/swisnap-agent-deployment.yaml b/deploy/base/deployment/swisnap-agent-deployment.yaml
  index 294c4b4..babff7d 100644
  --- a/deploy/base/deployment/swisnap-agent-deployment.yaml
  +++ b/deploy/base/deployment/swisnap-agent-deployment.yaml
  @@ -45,6 +45,9 @@ spec:
               - configMapRef:
                   name: swisnap-k8s-configmap
             volumeMounts:
  +            - name: logs-task-vol
  +              mountPath: /opt/SolarWinds/Snap/etc/tasks-autoload.d/task-logs-k8s-events.yaml
  +              subPath: task-logs-k8s-events.yaml
               - name: proc
                 mountPath: /host/proc
                 readOnly: true
  @@ -56,6 +59,12 @@ spec:
                 cpu: 100m
                 memory: 256Mi
         volumes:
  +        - name: logs-task-vol
  +          configMap:
  +            name: logs-task-config
  +            items:
  +              - key: task-logs-k8s-events.yaml
  +                path: task-logs-k8s-events.yaml
           - name: proc
             hostPath:
               path: /proc
  ```

[Environment Parameters](#environment-parameters) are not needed to turn on the Logs plugin. When you're attaching task configuration files through configMaps, there's no need to set the environment variables SWISNAP_ENABLE_<plugin-name>. The SolarWinds Snap Agent automatically loads plugins based on the files stored in configMaps and mounted to /opt/SolarWinds/Snap/etc/tasks-autoload.d/ in the container.
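To confirm that the configMap landed where the agent autoloads tasks, check for the file inside a running agent pod. The sketch below makes two assumptions: the Deployment is named `swisnap-agent-k8s` in `kube-system`, as in the pod setup above, and the agent image provides the `test` utility. The function name is hypothetical. `kubectl exec` returns the exit code of the remote command, so the function's status reflects whether the file exists.

```shell
#!/usr/bin/env bash
# Sketch: check that the logs task file from the configMap is mounted where
# the agent autoloads tasks. ASSUMPTIONS: Deployment swisnap-agent-k8s in
# kube-system; the container image provides the "test" utility.

task_file_mounted() {
  local file="${1:-task-logs-k8s-events.yaml}"
  local dir=/opt/SolarWinds/Snap/etc/tasks-autoload.d

  # kubectl exec propagates the remote command's exit code.
  kubectl exec -n kube-system deployment/swisnap-agent-k8s -- \
    test -f "$dir/$file"
}
```

To list everything the agent will autoload, run `kubectl exec -n kube-system deployment/swisnap-agent-k8s -- ls /opt/SolarWinds/Snap/etc/tasks-autoload.d/`. This also assumes the image ships `ls`.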

Integrating Kubernetes Cluster Events Collection With Loggly

Starting with SolarWinds Snap Agent release 4.1.0, you can collect cluster events and push them to Loggly using the logs collector included with the Snap Agent. There are two ways to enable this functionality. The first is to enable the default forwarder for the Snap Agent deployment, which monitors Normal events in the default namespace. The second is more advanced and requires creating the corresponding configMaps in your cluster with the proper task configuration. With this option you can edit the configuration yourself to change the event filters and the monitored Kubernetes namespaces, and to select the publisher you want.

Default Kubernetes log forwarder

Enable the default Kubernetes log forwarder:

- Clone the solarwinds-snap-agent-docker repository:

  ```shell
  git clone https://github.com/solarwinds/solarwinds-snap-agent-docker
  ```

- Create a Kubernetes secret for `SOLARWINDS_TOKEN`:

  ```shell
  kubectl create secret generic solarwinds-token -n kube-system --from-literal=SOLARWINDS_TOKEN=<YOUR_SOLARWINDS_TOKEN>
  ```

  Replace `<YOUR_SOLARWINDS_TOKEN>` with your SolarWinds AppOptics token.

- (Optional) If your Loggly token is different from your SolarWinds token, create a separate Kubernetes secret so the SolarWinds Snap Agent logs collector/forwarder can publish to Loggly. If your Loggly token is the same as your SolarWinds token, no action is needed.

  ```shell
  kubectl create secret generic loggly-token -n kube-system --from-literal=LOGGLY_TOKEN=<YOUR_LOGGLY_TOKEN>
  ```

  Replace `<YOUR_LOGGLY_TOKEN>` with your Loggly token.

- Edit kustomization.yaml for the Snap Agent deployment and set `SWISNAP_ENABLE_KUBERNETES_LOGS` to `true`:

  ```diff
  index 8b5d94b..f3aac10 100644
  --- a/deploy/overlays/stable/deployment/kustomization.yaml
  +++ b/deploy/overlays/stable/deployment/kustomization.yaml
  @@ -9,7 +9,7 @@ configMapGenerator:
     - name: swisnap-k8s-configma
       behavior: merge
       literals:
  -      - SWISNAP_ENABLE_KUBERNETES_LOGS=false
  +      - SWISNAP_ENABLE_KUBERNETES_LOGS=true
   images:
     - name: solarwinds/solarwinds-snap-agent-docker
  ```

- Create a Snap Agent Deployment, which automatically creates a corresponding ServiceAccount:

  ```shell
  kubectl apply -k ./deploy/overlays/stable/events-collector/
  ```

Watch your cluster events in Loggly.
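The default forwarder sends only Normal events from the `default` namespace, so a quiet cluster may show nothing in Loggly at first. One way to check the pipeline end to end is to start a short-lived pod in `default`. Kubernetes then records Normal events such as `Scheduled` and `Started` for it. The sketch below assumes your cluster can pull the public `busybox` image. The function and pod names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: generate Normal events in the default namespace so the default
# forwarder has something to send. ASSUMPTION: the busybox image is pullable.

emit_test_events() {
  local pod="${1:-swisnap-event-test}"  # hypothetical pod name

  # A short-lived pod produces Normal events (Scheduled, Pulled, Started, ...).
  kubectl run "$pod" -n default --image=busybox --restart=Never -- true || return 1

  # Show the Normal events recorded for that pod so far; these are the kind
  # of events the default forwarder watches.
  kubectl get events -n default \
    --field-selector "type=Normal,involvedObject.name=$pod"
}
```

Give the agent a minute and search Loggly for the pod name. Then remove the test pod with `kubectl delete pod swisnap-event-test -n default`.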

Advanced configuration for Kubernetes log forwarder

To use a custom task configuration for the Kubernetes log forwarder, create the corresponding configMaps in your cluster with the proper task configuration. An example config file is in [Event collector configs](examples/event-collector-configs). To enable the event collector in your deployment:

- Clone the solarwinds-snap-agent-docker repository:

  ```shell
  git clone https://github.com/solarwinds/solarwinds-snap-agent-docker
  ```

- Create a Kubernetes secret for `SOLARWINDS_TOKEN`:

  ```shell
  kubectl create secret generic solarwinds-token -n kube-system --from-literal=SOLARWINDS_TOKEN=<YOUR_SOLARWINDS_TOKEN>
  ```

  Replace `<YOUR_SOLARWINDS_TOKEN>` with your SolarWinds AppOptics token.

- (Optional) If your token for Loggly or Papertrail is different from your SolarWinds token, create a separate Kubernetes secret so the SolarWinds Snap Agent logs collector/forwarder can publish to Loggly or Papertrail. If your token for Loggly or Papertrail is the same as your SolarWinds token, no action is needed.

  ```shell
  # setting for loggly-http, loggly-http-bulk, loggly-syslog Logs Publishers
  kubectl create secret generic loggly-token -n kube-system --from-literal=LOGGLY_TOKEN=<REPLACE WITH LOGGLY TOKEN>

  # setting for swi-logs-http-bulk, swi-logs-http Logs Publishers
  kubectl create secret generic papertrail-token -n kube-system --from-literal=PAPERTRAIL_TOKEN=<REPLACE WITH PAPERTRAIL TOKEN>

  # setting for papertrail-syslog publisher
  kubectl create secret generic papertrail-publisher-settings -n kube-system --from-literal=PAPERTRAIL_HOST=<REPLACE WITH PAPERTRAIL HOST> --from-literal=PAPERTRAIL_PORT=<REPLACE WITH PAPERTRAIL PORT>
  ```

  Replace the placeholders for the Loggly or Papertrail tokens, host, and port with your own settings.

- Update the task-logs-k8s-events.yaml file as needed to configure the Kubernetes Events Log task. This config contains a `plugins.config.filters` field where you can specify filters for the event types or namespaces to monitor. If necessary, you can also set a different publisher plugin; for more information, see Publishers. This example event collector filter watches for `Normal` events in the `default` namespace:

  ```yaml
  version: 2
  schedule:
    type: streaming
  plugins:
    - plugin_name: k8s-events
      config:
        incluster: true
        filters:
          - namespace: default
            watch_only: true
            options:
              fieldSelector: "type==Normal"
          #- namespace: kube-system
          #  watch_only: true
          #  options:
          #    fieldSelector: "type==Warning"
      #tags:
      #  /k8s-events/[namespace=my_namespace]/string_line:
      #    sometag: somevalue
      publish:
        - plugin_name: loggly-http-bulk # this could be set to any other Logs Publisher
  ```

- Create a configMap for the Kubernetes Events Log task configuration:

  ```shell
  kubectl create configmap task-autoload --from-file=./examples/event-collector-configs/task-logs-k8s-events.yaml --namespace=kube-system
  kubectl describe configmaps -n kube-system task-autoload
  ```

- Create a Snap Agent Deployment, which automatically creates a corresponding ServiceAccount:

  ```shell
  kubectl apply -k ./deploy/overlays/stable/events-collector/
  ```

Watch your cluster events in Loggly or Papertrail.
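Before you load a new filter into the agent, it can help to see which events it would select. The sketch below passes a namespace and field selector straight to `kubectl get events`. It assumes, without confirmation from the plugin documentation, that the `fieldSelector` option in task-logs-k8s-events.yaml follows standard Kubernetes field-selector syntax, as its `type==Normal` value suggests. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: preview the events a k8s-events filter would match.
# ASSUMPTION: the plugin's fieldSelector uses Kubernetes field-selector syntax.

preview_event_filter() {
  local ns="$1" selector="$2"

  if [ -z "$ns" ] || [ -z "$selector" ]; then
    echo "usage: preview_event_filter NAMESPACE FIELD_SELECTOR" >&2
    return 1
  fi
  # "==" and "=" both mean equality in Kubernetes field selectors.
  kubectl get events -n "$ns" --field-selector "$selector"
}
```

For example, `preview_event_filter default 'type==Normal'` mirrors the active filter in the example config. `preview_event_filter kube-system 'type==Warning'` mirrors the commented-out one.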

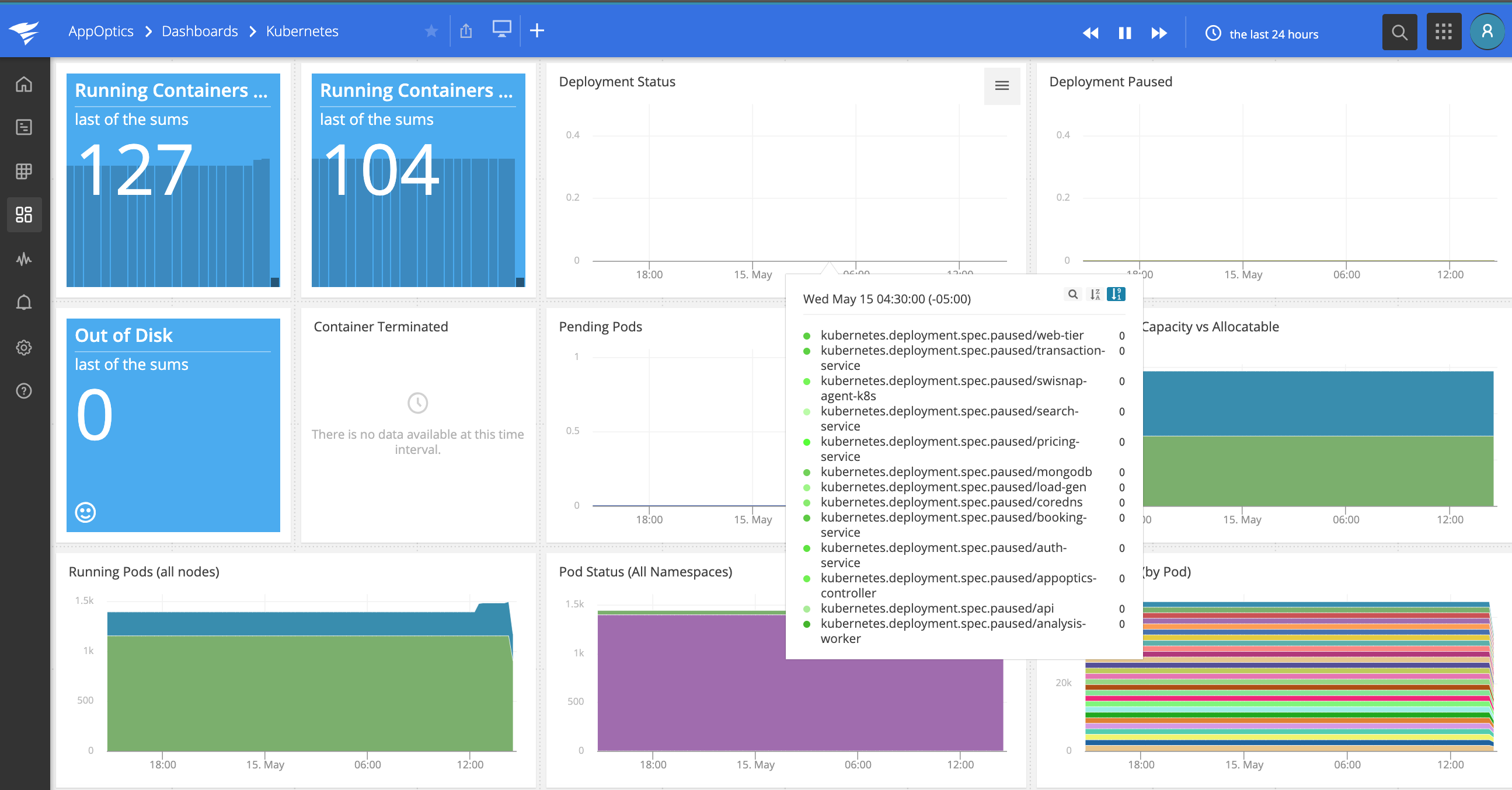

Dashboard

After you've successfully set up the SolarWinds Snap Agent, enable the Kubernetes plugin in the AppOptics UI; data should start appearing shortly afterward. For more information, see Charts and Dashboards.

Navigation Notice: When the APM Integrated Experience is enabled, AppOptics shares a common navigation and enhanced feature set with other integrated experience products. How you navigate AppOptics and access its features may vary from these instructions.

The scripts are not supported under any SolarWinds support program or service. The scripts are provided AS IS without warranty of any kind. SolarWinds further disclaims all warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The risk arising out of the use or performance of the scripts and documentation stays with you. In no event shall SolarWinds or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the scripts or documentation.